Past Work

Inertial Navigation and Mapping for Autonomous Vehicles

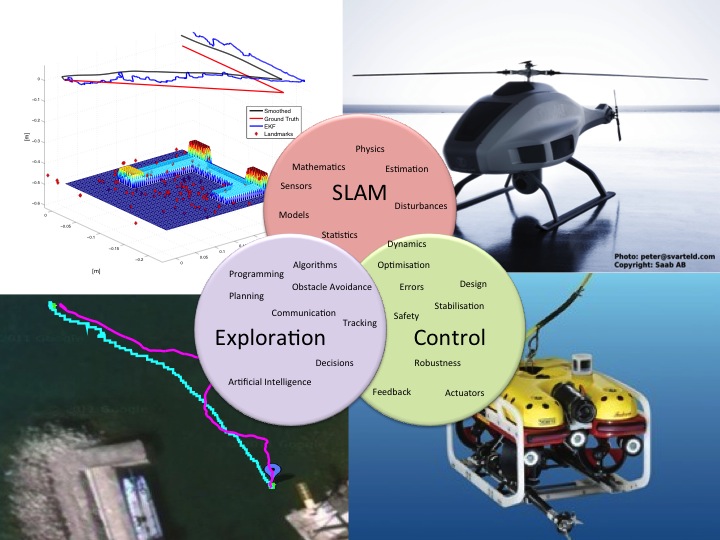

Navigation and mapping in unknown environments is an important building block for increased autonomy of unmanned vehicles, since external positioning systems may be susceptible to interference or simply unavailable. Navigation and mapping require signal processing of vehicle sensor data to estimate the vehicle's motion relative to the surrounding environment while simultaneously estimating various properties of that environment. Physical models of the sensors, the vehicle motion and external influences are used together with statistically motivated methods to solve these problems. The thesis by Martin Skoglund mainly addresses the three navigation and mapping problems described below.

We study how a vessel with a known magnetic signature and a sensor network of magnetometers can be used to determine the sensor positions while simultaneously estimating the vessel's route in an extended Kalman filter (EKF). This is a so-called simultaneous localisation and mapping (SLAM) problem with a reversed measurement relationship: the moving vessel is the source of the measured signal, while the stationary sensors, whose positions form the map, make the measurements.
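The sketch below shows a single EKF measurement update for this reversed setting. The state stacks the vessel position with the unknown sensor positions. Several simplifications are assumptions made for illustration: the scalar 1/r^3 field model, the planar geometry and the numbers. The function names (`field_magnitude`, `measure`, `ekf_update`) are also hypothetical. The thesis uses the vessel's known magnetic signature instead, and the time update for the vessel's motion is omitted here.

```python
import numpy as np


def field_magnitude(vessel_xy, sensor_xy, strength=1.0):
    """Hypothetical scalar signature: magnitude falls off as 1/r^3, like a dipole."""
    r = np.linalg.norm(vessel_xy - sensor_xy)
    return strength / r**3


def measure(state, n_sensors):
    """One reading per sensor; state = [vessel_x, vessel_y, s1_x, s1_y, s2_x, ...]."""
    vessel = state[:2]
    return np.array([field_magnitude(vessel, state[2 + 2 * i:4 + 2 * i])
                     for i in range(n_sensors)])


def numerical_jacobian(f, x, eps=1e-6):
    """Central-difference Jacobian of f evaluated at x."""
    fx = f(x)
    J = np.zeros((fx.size, x.size))
    for k in range(x.size):
        dx = np.zeros_like(x)
        dx[k] = eps
        J[:, k] = (f(x + dx) - f(x - dx)) / (2 * eps)
    return J


def ekf_update(x, P, y, R, h):
    """EKF measurement update; h is linearised numerically around the estimate x."""
    H = numerical_jacobian(h, x)
    S = H @ P @ H.T + R
    K = P @ H.T @ np.linalg.inv(S)
    x_new = x + K @ (y - h(x))
    P_new = (np.eye(x.size) - K @ H) @ P
    return x_new, P_new


# Vessel near the origin; three sensors whose positions are unknown to the filter.
n_sensors = 3
true_state = np.array([0.0, 0.0, 3.0, 0.0, 0.0, 3.0, -3.0, -1.0])
rng = np.random.default_rng(0)
x0 = true_state + rng.normal(scale=0.2, size=true_state.size)  # perturbed prior mean
P0 = 0.04 * np.eye(true_state.size)
R = (1e-4) ** 2 * np.eye(n_sensors)


def h(state):
    return measure(state, n_sensors)


y = h(true_state)  # noise-free readings, for illustration only
x1, P1 = ekf_update(x0, P0, y, R, h)
print("trace(P) before/after:", np.trace(P0), np.trace(P1))
```

The vessel and the sensors share one state vector, so each reading couples the route with the map. This coupling is what makes the problem a SLAM problem rather than plain tracking.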

Previously determined hydrodynamic models of a remotely operated vehicle (ROV) are used together with the vehicle's own sensors in an EKF to improve navigation performance. The system is evaluated on data from sea trials, and the results show that the linear velocity relative to the water, in particular, can be determined accurately.
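The following sketch shows how a hydrodynamic model can serve as the EKF process model. It uses a hypothetical one-dimensional surge model with linear and quadratic damping, driven by a known thrust. The filter sees only noisy positions. The parameter values, the absence of water current and the function names are all assumptions. Because there is no current, speed through the water equals speed over ground in this toy.

```python
import numpy as np

# Hypothetical surge-model parameters; the thesis uses previously identified ROV models.
MASS, D_LIN, D_QUAD = 10.0, 2.0, 1.0  # mass and linear/quadratic damping
DT = 0.1                               # sample time [s]


def f(state, tau):
    """Euler step of the surge model; state = [position, speed through the water]."""
    pos, u = state
    u_dot = (tau - D_LIN * u - D_QUAD * u * abs(u)) / MASS
    return np.array([pos + DT * u, u + DT * u_dot])


def f_jacobian(state):
    """Jacobian of f with respect to the state (d(u|u|)/du = 2|u|)."""
    _, u = state
    return np.array([[1.0, DT],
                     [0.0, 1.0 - DT * (D_LIN + 2.0 * D_QUAD * abs(u)) / MASS]])


def run_ekf(n_steps=300, tau=5.0, meas_std=0.05, seed=0):
    """Simulate the vehicle and track it with an EKF that only measures position."""
    rng = np.random.default_rng(seed)
    H = np.array([[1.0, 0.0]])
    Q = np.diag([1e-6, 1e-6])
    R = np.array([[meas_std**2]])
    truth = np.array([0.0, 0.0])
    est, P = np.array([0.0, 0.5]), np.diag([0.01, 1.0])  # deliberately wrong speed
    for _ in range(n_steps):
        truth = f(truth, tau)
        y = np.array([truth[0] + rng.normal(scale=meas_std)])
        # Time update: propagate with the hydrodynamic model and the known thrust.
        F = f_jacobian(est)
        est = f(est, tau)
        P = F @ P @ F.T + Q
        # Measurement update with the noisy position reading.
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        est = est + K @ (y - H @ est)
        P = (np.eye(2) - K @ H) @ P
    return truth, est


truth, est = run_ekf()
print("true speed:", truth[1], "estimated speed:", est[1])
```

The model contributes information that the position readings lack: given the thrust, the damping terms predict how the speed must evolve. With these numbers the true speed settles at the value where thrust balances drag, `sqrt(6) - 1`.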

The third problem addressed is SLAM using inertial sensors (accelerometers and gyroscopes) and an optical camera contained in a single sensor unit. This problem spans three publications.

We study how a SLAM estimate, consisting of a point cloud map and the sensor unit's three-dimensional trajectory, speed and orientation, can be improved by solving a nonlinear least-squares (NLS) problem. The NLS problem minimises the predicted motion error and the error in the predicted point cloud coordinates given all camera measurements, and it is initialised using EKF-SLAM.
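The Gauss-Newton sketch below illustrates NLS refinement. It minimises a sum of squared residuals from a given starting point, which here plays the role of the EKF-SLAM output. The toy problem, with its noise-free data and all function names, is hypothetical: it refines a single 2-D landmark from bearings taken at known camera positions. The thesis instead refines the trajectory, speed, orientation and point cloud jointly.

```python
import numpy as np


def numerical_jacobian(f, x, eps=1e-6):
    """Central-difference Jacobian of f evaluated at x."""
    fx = f(x)
    J = np.zeros((fx.size, x.size))
    for k in range(x.size):
        dx = np.zeros_like(x)
        dx[k] = eps
        J[:, k] = (f(x + dx) - f(x - dx)) / (2 * eps)
    return J


def gauss_newton(residual, theta0, max_iter=50, tol=1e-12):
    """Minimise 0.5 * ||residual(theta)||^2 with undamped Gauss-Newton steps."""
    theta = np.asarray(theta0, dtype=float).copy()
    for _ in range(max_iter):
        r = residual(theta)
        J = numerical_jacobian(residual, theta)
        # Solve the linearised least-squares problem J @ step ~= -r.
        step, *_ = np.linalg.lstsq(J, -r, rcond=None)
        theta += step
        if np.linalg.norm(step) < tol:
            break
    return theta


def wrap(angle):
    """Wrap angles to (-pi, pi]."""
    return np.angle(np.exp(1j * angle))


cameras = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]])
landmark_true = np.array([4.0, 3.0])


def predicted_bearings(landmark):
    d = landmark - cameras
    return np.arctan2(d[:, 1], d[:, 0])


measured = predicted_bearings(landmark_true)  # noise-free, for illustration


def residual(landmark):
    return wrap(measured - predicted_bearings(landmark))


initial_guess = np.array([3.5, 2.5])  # stands in for the EKF-SLAM estimate
refined = gauss_newton(residual, initial_guess)
print("refined landmark:", refined)
```

Gauss-Newton converges only locally, so a reasonable starting point matters. This is why the initialisation discussed next is important.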

We show how NLS-SLAM can be initialised as a sequence of almost uncoupled problems with simple and often linear solutions. The approach also scales much better to larger data sets than EKF-SLAM, and NLS-SLAM gives significantly better results when started from the proposed initialisation than when started from arbitrary points.

A SLAM formulation using the expectation maximisation (EM) algorithm is also proposed. EM splits the original problem into two simpler problems and solves them iteratively: here, the platform motion is one problem and the landmark map is the other. The first problem is solved using an extended Rauch-Tung-Striebel smoother, while the second is solved with a quasi-Newton method. EM-SLAM gives better results than NLS-SLAM in terms of both accuracy and complexity.
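The platform-motion half of EM-SLAM uses an extended Rauch-Tung-Striebel (RTS) smoother. As a minimal sketch, the code below shows the linear Kalman filter and RTS backward pass that the extended version applies to a linearised model. The constant-velocity model, the noise levels and the function name `kalman_rts` are assumptions for illustration. The landmark (quasi-Newton) half of the iteration is omitted.

```python
import numpy as np


def kalman_rts(y, F, H, Q, R, x0, P0):
    """Linear Kalman filter followed by a Rauch-Tung-Striebel backward pass.

    y has shape (n, ny); x0, P0 describe the state one step before y[0].
    Returns filtered and smoothed means and covariances.
    """
    n, nx = len(y), x0.size
    x_pred, P_pred = np.zeros((n, nx)), np.zeros((n, nx, nx))
    x_filt, P_filt = np.zeros((n, nx)), np.zeros((n, nx, nx))
    x, P = x0, P0
    for k in range(n):
        # Time update.
        x = F @ x
        P = F @ P @ F.T + Q
        x_pred[k], P_pred[k] = x, P
        # Measurement update.
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ (y[k] - H @ x)
        P = (np.eye(nx) - K @ H) @ P
        x_filt[k], P_filt[k] = x, P
    # Backward pass: combine each filtered estimate with the smoothed future.
    x_smooth, P_smooth = x_filt.copy(), P_filt.copy()
    for k in range(n - 2, -1, -1):
        G = P_filt[k] @ F.T @ np.linalg.inv(P_pred[k + 1])
        x_smooth[k] = x_filt[k] + G @ (x_smooth[k + 1] - x_pred[k + 1])
        P_smooth[k] = P_filt[k] + G @ (P_smooth[k + 1] - P_pred[k + 1]) @ G.T
    return x_filt, P_filt, x_smooth, P_smooth


# Hypothetical 1-D constant-velocity platform with noisy position measurements.
dt, q, n = 0.1, 0.5, 100
F = np.array([[1.0, dt], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
Q = q * np.array([[dt**3 / 3, dt**2 / 2], [dt**2 / 2, dt]])
R = np.array([[0.25]])

rng = np.random.default_rng(1)
state, states, meas = np.array([0.0, 1.0]), [], []
for _ in range(n):
    state = F @ state + rng.multivariate_normal(np.zeros(2), Q)
    states.append(state)
    meas.append(H @ state + rng.normal(scale=0.5, size=1))
y = np.array(meas)
truth = np.array(states)

x_filt, P_filt, x_smooth, P_smooth = kalman_rts(y, F, H, Q, R, np.zeros(2), np.eye(2))


def position_rmse(est):
    return np.sqrt(np.mean((est[:, 0] - truth[:, 0]) ** 2))


print("position RMSE filtered/smoothed:", position_rmse(x_filt), position_rmse(x_smooth))
```

The smoother uses all measurements, both past and future, for each time step. Its covariance is therefore never larger than the filter's, and the two coincide at the last sample.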

Navigation and Mapping for Aerial Vehicles Based on Inertial and Imaging Sensors

Small and medium-sized unmanned aerial vehicles (UAVs) are used today in military missions and will in the future find many new application areas, such as surveillance for exploration and security. To enable all these foreseen applications, UAVs have to be cheap and lightweight, which restricts the sensors that can be used for navigation and surveillance. The thesis by Zoran Sjanic investigates several aspects of how fusing navigation and imaging sensors can improve both tasks to a level that would require much more expensive sensors with the traditional approach of separating the navigation system from the applications. The core idea is that vision sensors can support the navigation system by providing odometric information about the motion, while the navigation system can help make the vision algorithms that map the surrounding environment more efficient. The unified framework for this kind of approach is called Simultaneous Localisation and Mapping (SLAM), and here it is applied to inertial sensors, radar and an optical camera.

Synthetic Aperture Radar (SAR) uses a radar and the motion of the UAV to provide an image of the microwave reflectivity of the ground. SAR images are a good complement to optical images, giving an all-weather surveillance capability, but focusing them requires an accurate navigation system, which typical UAV sensors do not provide. However, both higher navigation accuracy and, consequently, better-focused images can be obtained by combining two sources of information. The inertial sensors measure the UAV's motion, and the SAR images reflect how image quality depends on that motion. The fusion of these sensors can be performed in both batch and sequential form. For the batch form, we propose an optimisation formulation of the navigation and focusing problem, while the sequential form results in a filtering approach. In the optimisation method, the focus of the processed SAR images is measured in two ways: with the image entropy, and with an image matching approach in which SAR images are matched to a map of the area. In the proposed filtering method, the motion information is estimated from the raw radar data. It corresponds to the time derivative of the range between the UAV and the imaged scene, which can be related to the UAV's motion.
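A minimal sketch of entropy as a focus measure follows. It uses a toy 1-D azimuth signal from a single point target, corrupted by a hypothetical quadratic phase error that stands in for uncompensated platform motion. The FFT serves as a stand-in for SAR image formation. Candidate corrections are scanned on a grid, and the one whose compressed image has the lowest entropy is kept. The signal model, the grid and the function names are assumptions. In the thesis, the navigation parameters are estimated rather than a single phase coefficient.

```python
import numpy as np


def image_entropy(image):
    """Entropy of the normalised intensity |I|^2; a sharper image gives a lower value."""
    intensity = np.abs(image) ** 2
    p = intensity / intensity.sum()
    p = p[p > 0]  # treat 0 * log(0) as 0
    return float(-np.sum(p * np.log(p)))


def autofocus(signal, t, candidates):
    """Pick the quadratic phase correction whose compressed image has minimum entropy."""
    entropies = [image_entropy(np.fft.fft(signal * np.exp(-1j * a * t**2)))
                 for a in candidates]
    return candidates[int(np.argmin(entropies))]


# Toy 1-D azimuth signal from a single point target. The quadratic phase term
# (hypothetical coefficient ALPHA_TRUE) stands in for uncompensated platform motion.
N, ALPHA_TRUE = 256, 40.0
t = np.linspace(-0.5, 0.5, N, endpoint=False)
signal = np.exp(1j * 2 * np.pi * 20 * t) * np.exp(1j * ALPHA_TRUE * t**2)

candidates = np.linspace(0.0, 80.0, 161)  # grid step 0.5
best = autofocus(signal, t, candidates)
print("estimated phase coefficient:", best)
```

With the correct coefficient, all energy falls into one frequency bin and the entropy drops to essentially zero. Any residual phase error spreads the energy and raises the entropy.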

Another imaging sensor exploited in this framework is an ordinary optical camera. As in the SAR case, camera images and inertial sensors can be used to support the navigation estimate and simultaneously build a three-dimensional map of the observed environment, so-called inertial/visual SLAM. Here too, the problem is posed in an optimisation framework, leading to a batch Maximum Likelihood (ML) estimate of the navigation parameters and the map. The ML problem is solved in two ways. The first is the straightforward way, resulting in nonlinear least squares in which both the map and the navigation parameters are treated as parameters. The second is the Expectation-Maximisation (EM) approach. In the EM approach, all unknown variables are split into two sets, hidden variables and actual parameters; here the map is treated as parameters and the navigation states as hidden variables. This split makes the total problem computationally cheaper to solve than the original ML formulation. Both optimisation problems are nonlinear and non-convex, and they require a good initial solution to obtain good parameter estimates. For this purpose, a method for initialising inertial/visual SLAM is devised that uses the conditionally linear structure of the problem to obtain the initial parameter estimates. The benefits and performance improvements of the methods are illustrated on both simulated and real data.
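The camera model alone can illustrate the conditionally linear structure. Assume the camera-to-world orientations `R_t` are known, for example from integrated gyroscope data. A normalised image measurement `y_t` of landmark `l`, seen from position `p_t`, then satisfies `cross(y_t, R_t^T (l - p_t)) = 0`, which is linear in both `l` and `p_t`. The sketch below keeps the positions fixed for brevity and solves for one landmark by linear least squares. The geometry, the noise-free data and the helper names are hypothetical. This is a simplified illustration, not the initialisation method of the thesis.

```python
import numpy as np


def skew(v):
    """Matrix form of the cross product: skew(a) @ b == np.cross(a, b)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])


def triangulate(bearings, rotations, positions):
    """Linear least-squares landmark estimate given known camera poses.

    Each view contributes skew(y_t) @ R_t.T @ (l - p_t) = 0, i.e. A_t @ l = A_t @ p_t.
    """
    A_rows, b_rows = [], []
    for y, R, p in zip(bearings, rotations, positions):
        A = skew(y) @ R.T
        A_rows.append(A)
        b_rows.append(A @ p)
    landmark, *_ = np.linalg.lstsq(np.vstack(A_rows), np.concatenate(b_rows), rcond=None)
    return landmark


def rot_z(theta):
    """Rotation about the z axis (hypothetical camera orientations)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


landmark_true = np.array([2.0, 1.0, 5.0])
positions = [np.array([0.0, 0.0, 0.0]), np.array([1.0, 0.0, 0.0]), np.array([0.0, 1.0, 0.5])]
rotations = [rot_z(0.0), rot_z(0.1), rot_z(-0.2)]
# Synthetic, noise-free measurements in normalised image coordinates [u, v, 1].
bearings = [R.T @ (landmark_true - p) for R, p in zip(rotations, positions)]
bearings = [b / b[2] for b in bearings]

estimate = triangulate(bearings, rotations, positions)
print("triangulated landmark:", estimate)
```

Once the orientations are fixed, no iterations or starting point are needed: a single linear solve gives the estimate. Such estimates can then seed the nonlinear ML or EM solvers.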